Most people don't read a word or a text letter by letter, because our brain can grasp the context and recognise a word without reading each individual letter. According to some research, a computer can do this too, but it works differently: it learns the shape of a word and can then recognise it based on that shape. This blog article explains how this works in detail and what we can achieve with it.

The different processing mechanisms of natural language

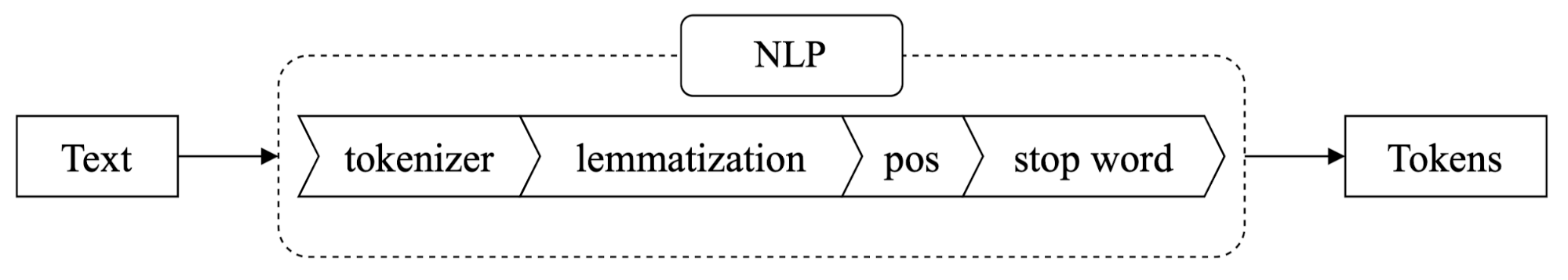

The first step a computer goes through to process a text is called «Natural Language Processing» (NLP for short). In this step, texts are automatically analysed and normalised for further processing. For example, the word 'was' becomes 'be' or 'don't' becomes 'do not'. This step requires a lot of computing time to put the data to be processed into the desired form, but it is necessary for a machine to understand the word or text at all. The NLP process consists of four sub-steps. After their successful completion, the input text is converted into so-called «tokens» (see «Tokenization»).

Tokenization

The first substep consists of segmenting text into words and punctuation marks. The segments are generated language-specifically: in English, 'won't' and 'can't' become 'will not' and 'can not'. Punctuation marks are not simply eliminated, because their handling depends on the context: a full stop at the end of a sentence is split off as its own token, whereas the full stops in the abbreviation of United Kingdom (U.K.) are retained.
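As a rough illustration of this substep, here is a minimal sketch in Python. The names `CONTRACTIONS`, `TOKEN_PATTERN` and `tokenize` are our own assumptions for this example, and the tiny contraction table stands in for the much larger language-specific resources a real tokenizer would use.

```python
import re

# Hypothetical, deliberately tiny contraction table (assumption for this sketch).
CONTRACTIONS = {"won't": "will not", "can't": "can not", "don't": "do not"}

# Three alternatives, tried in this order:
#   1. abbreviation-like runs such as "U.K." (kept together, full stops included)
#   2. words, optionally with an apostrophe part such as "won't"
#   3. any single punctuation mark
TOKEN_PATTERN = re.compile(r"(?:[A-Za-z]\.){2,}|\w+(?:'\w+)?|[^\w\s]")


def tokenize(text):
    """Split text into word and punctuation tokens, expanding known contractions."""
    tokens = []
    for match in TOKEN_PATTERN.findall(text):
        expansion = CONTRACTIONS.get(match.lower())
        if expansion:
            tokens.extend(expansion.split())
        else:
            tokens.append(match)
    return tokens


print(tokenize("I won't leave the U.K. today."))
```

For this sentence the sketch yields `['I', 'will', 'not', 'leave', 'the', 'U.K.', 'today', '.']`: the sentence-final full stop is split off, while the ones inside 'U.K.' stay attached. An abbreviation at the very end of a sentence would swallow the final full stop, which is one reason real tokenizers need more than a single regular expression.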

Lemmatization/Stemming

In the next substep, words are reduced to their base form («lemma»). For example, the lemma of 'was' is 'to be' and the lemma of 'women' is 'woman'. Different forms of the same word can thus be grouped and counted as one word with the same meaning. This is an important prerequisite for the so-called clustering procedure, since frequency has a strong influence on the individual calculations of the procedure. At the same time, tracing words back to their lemmas is very computationally intensive, since each individual word must be looked up in a dictionary.
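The dictionary lookup described here can be sketched as follows. `LEMMA_DICTIONARY` is a hypothetical miniature lexicon that we made up for illustration; a real lemmatizer relies on a full dictionary per language, which is where the computational cost comes from.

```python
from collections import Counter

# Hypothetical miniature lemma dictionary (assumption for this sketch).
LEMMA_DICTIONARY = {
    "was": "be",
    "is": "be",
    "women": "woman",
    "costs": "cost",
    "euros": "euro",
}


def lemmatize(tokens):
    """Map every token to its lemma; unknown tokens are only lower-cased."""
    return [LEMMA_DICTIONARY.get(token.lower(), token.lower()) for token in tokens]


# Different surface forms now count as the same word.
lemma_counts = Counter(lemmatize(["Women", "woman", "was", "is"]))
print(lemma_counts)
```

After lemmatization, 'Women' and 'woman' are counted together, as are 'was' and 'is', which is exactly the grouping effect the clustering step depends on.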

Part-of-Speech (POS) Tagging

The next step in the NLP process is called part-of-speech (POS) tagging: each token is assigned its part of speech, such as verb or noun. With this information, nouns can be prioritised over verbs.
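To make the idea of prioritising nouns concrete, here is a toy sketch. It assumes a hand-written lookup table (`POS_LEXICON`) and made-up weights (`TAG_WEIGHT`); real taggers use trained statistical models rather than a fixed table, so this only illustrates what happens with the tags once they exist.

```python
# Hypothetical lookup table and weights (assumptions for this sketch).
POS_LEXICON = {"apple": "NOUN", "euro": "NOUN", "cost": "VERB", "beautiful": "ADJ"}
TAG_WEIGHT = {"NOUN": 2.0, "VERB": 1.0}
DEFAULT_WEIGHT = 0.5  # used for every other part of speech


def tag(tokens):
    """Attach a part-of-speech tag to each token; unknown tokens get 'X'."""
    return [(token, POS_LEXICON.get(token, "X")) for token in tokens]


def prioritise(tagged_tokens):
    """Order tokens by the weight of their tag, nouns first."""
    ranked = sorted(
        tagged_tokens,
        key=lambda pair: -TAG_WEIGHT.get(pair[1], DEFAULT_WEIGHT),
    )
    return [token for token, _ in ranked]


tagged = tag(["beautiful", "apple", "euro", "cost"])
print(prioritise(tagged))
```

With these assumed weights, the nouns 'apple' and 'euro' come first, followed by the verb 'cost' and the adjective 'beautiful'.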

Stopword Elimination/Alpha Extraction

In the last step, stopword elimination filters out words that occur very frequently in a language but carry little meaning on their own. Examples of stopwords are 'and', 'on', or 'I'. Alpha extraction filters out stand-alone punctuation marks and numbers.
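Both filters fit into a few lines of Python. The `STOPWORDS` set below is a hypothetical, very short list chosen for this example; real stopword lists are language-specific and contain a few hundred entries.

```python
# Hypothetical, very short stopword list (assumption for this sketch).
STOPWORDS = {"and", "on", "i", "this", "is", "a", "that"}


def filter_tokens(tokens):
    """Keep only purely alphabetic tokens that are not stopwords."""
    return [
        token
        for token in tokens
        if token.isalpha() and token.lower() not in STOPWORDS
    ]


sentence_tokens = ["This", "is", "a", "beautiful", "apple", "that", "costs", "5", "euros", "."]
print(filter_tokens(sentence_tokens))
```

For the sample tokens, only 'beautiful', 'apple', 'costs' and 'euros' remain. Note that such a strict alphabetic check would also drop a token like 'U.K.', so a real pipeline would need an exception for abbreviations.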

The result of the NLP process is a «Bag of Words (BoW)», i.e. the most important words of a text. These words are then vectorised, that is, put into a form a computer can work with. For example, the BoW from the sentence 'This is a beautiful apple that costs 5 euros' is ['beautiful', 'apple', 'euro', 'cost']. We will continue to work with this BoW in the following.
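One common way to vectorise a BoW is to count how often each vocabulary word occurs in it. The sketch below assumes this simple count-based scheme; the function names and the second sample bag are our own inventions for illustration, and other weightings are possible.

```python
from collections import Counter


def build_vocabulary(bags):
    """Collect every distinct word across all bags, in a fixed (sorted) order."""
    return sorted({word for bag in bags for word in bag})


def vectorise(bag, vocabulary):
    """Turn one bag of words into a count vector aligned with the vocabulary."""
    counts = Counter(bag)
    return [counts[word] for word in vocabulary]


bags = [
    ["beautiful", "apple", "euro", "cost"],  # the BoW from the example sentence
    ["apple", "apple", "tree"],              # a second, made-up BoW
]
vocabulary = build_vocabulary(bags)
vectors = [vectorise(bag, vocabulary) for bag in bags]
print(vocabulary)
print(vectors)
```

Here the vocabulary is `['apple', 'beautiful', 'cost', 'euro', 'tree']`, so the two bags become `[1, 1, 1, 1, 0]` and `[2, 0, 0, 0, 1]`. Every text is now a list of numbers of the same length, which is what the clustering step needs.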

Unsupervised learning

When we watch a movie or read a book, we can usually answer the following questions quickly: What is the story about? What does it refer to? What does it mean? A machine cannot. It first has to build up context on the basis of the Bag of Words. To do this, it groups the elements from the BoW that are similar. This mechanism is called «unsupervised learning.» Unsupervised learning refers to «the organisation of unlabeled data into similarity groups called clusters.» In our case, the unlabeled data is the vectorised Bag of Words, and the similarity groups contain the items that belong together with respect to what we want to know.
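To give an impression of what grouping by similarity can look like, here is a deliberately simple sketch. It assumes cosine similarity between count vectors and a greedy assignment with a fixed `threshold`; both are our choices for illustration and not the method used in KenSpace.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster(vectors, threshold=0.5):
    """Greedy grouping: join the first cluster whose first member is similar enough."""
    clusters = []  # each cluster is a list of indices into `vectors`
    for index, vector in enumerate(vectors):
        for members in clusters:
            if cosine_similarity(vector, vectors[members[0]]) >= threshold:
                members.append(index)
                break
        else:
            # No existing cluster was similar enough, so start a new one.
            clusters.append([index])
    return clusters


sample_vectors = [[1, 1, 0, 0], [1, 1, 1, 0], [0, 0, 0, 1], [0, 0, 1, 1]]
print(cluster(sample_vectors))
```

No labels are involved at any point: the four sample vectors end up in the two groups `[[0, 1], [2, 3]]` purely because of the words they share.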

The reading Artificial Intelligence «KenSpace»

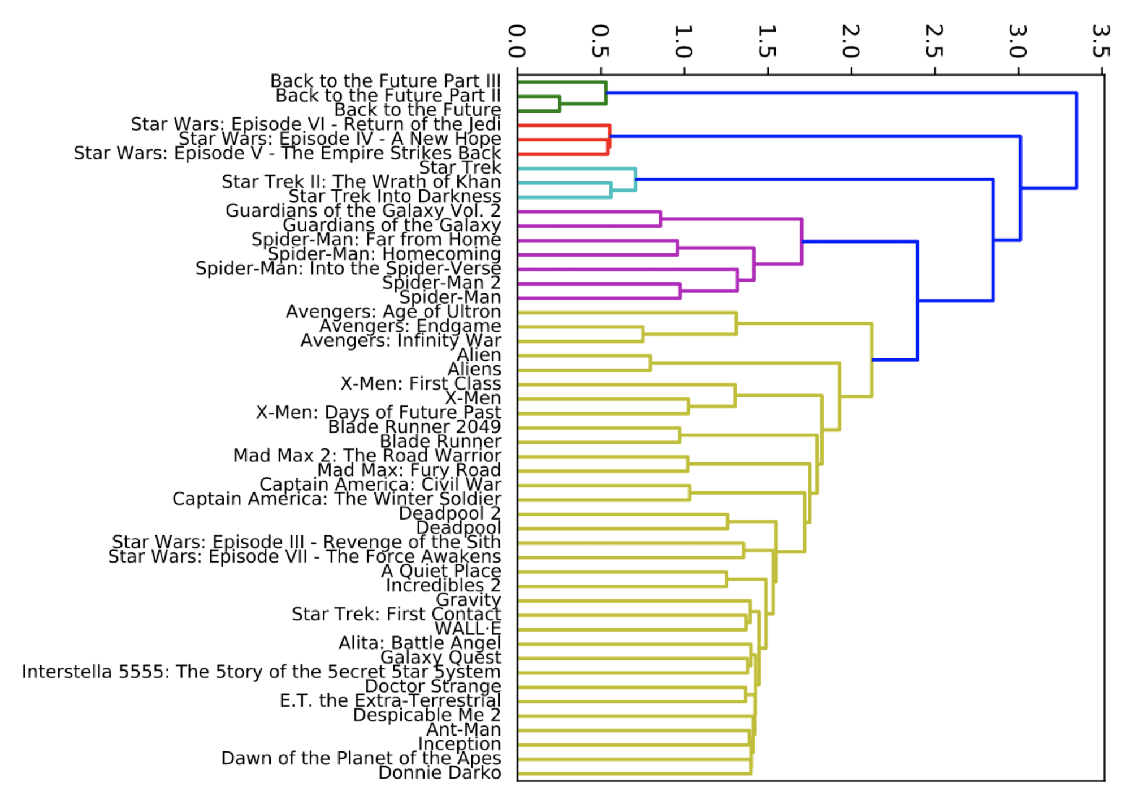

In the bachelor thesis «KenSpace: Exploratory and Complex Searches on Unstructured Documents» by myself and Stefan Brunner at the Zurich University of Applied Sciences, we presented different strategies for summarising items. The mathematical part behind unsupervised learning is not covered in this article; here we only present the results. Using movie data, we can already see that the clustering method works very well:

At level 3.5, two different science fiction groups are formed. One group contains the Back to the Future film series, the other Star Wars and Star Trek films. The first group on level 3 contains the Star Wars episodes IV to VI, while the other group on level 3 consists of intermixed films such as Star Trek, Spider-Man or Guardians of the Galaxy. At this level, the variance is already larger than at level 3.5. At the next lower level, two groups are again formed according to the same principle, by reusing part of the upper group and expanding it with new films. Thus, the variance minimisation of the respective subdivisions at the middle to lower levels is about the same. Regarding the film data, the lowest level is very well structured. On this level, the respective film series are presented individually. (Source: Bachelor thesis «KenSpace: Explorative and complex searches in unstructured documents»).

Demonstration of a reading Artificial Intelligence using data from Schuler Auctions

The Schuler auction house in Zurich has a comprehensive dataset of all items ever offered for sale in an auction. We were kindly allowed to use this data for our work with KenSpace. Each record from Schuler Auctions describes an item and consists of a title and a description. We used Artificial Intelligence (AI) to merge and analyse these two fields.

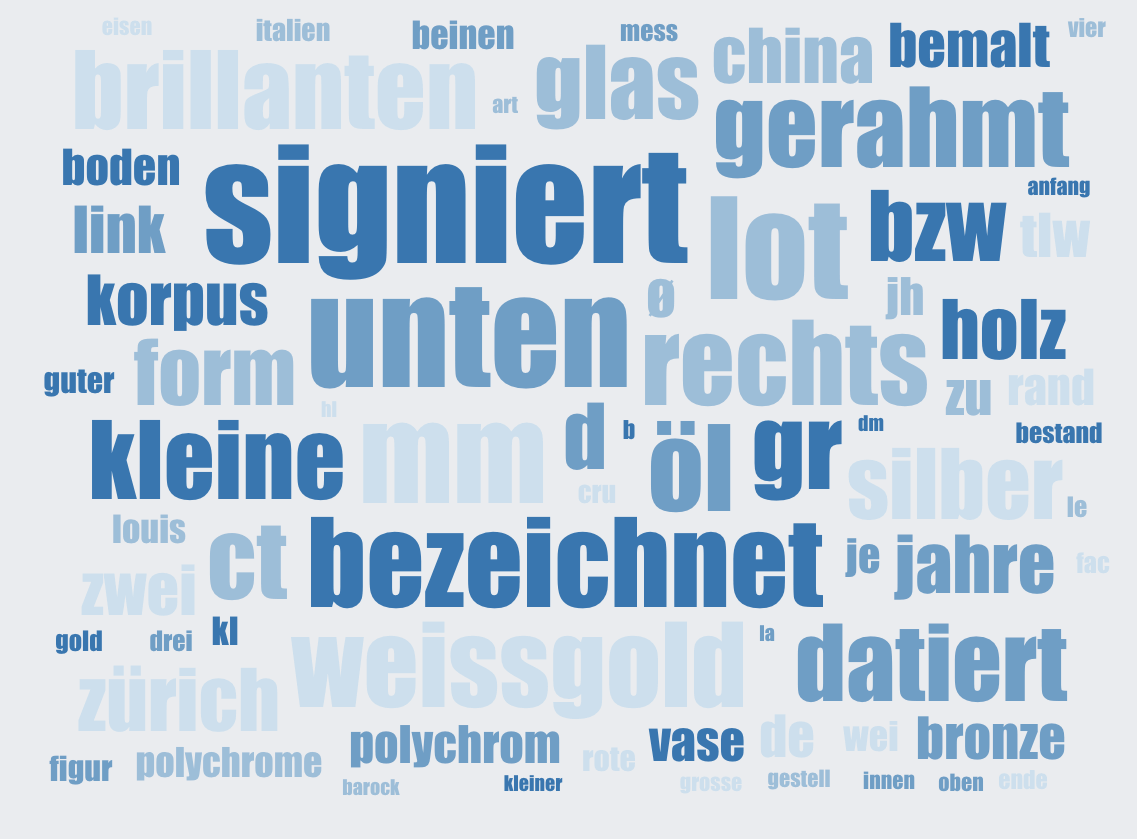

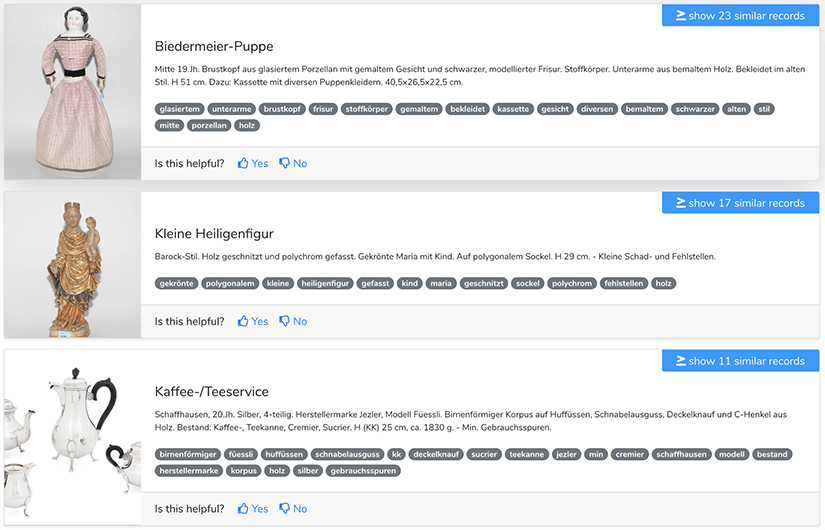

KenSpace's word cloud shows the most frequently used words. It already contains very good keywords that could be used to search the items, for example 'wood', 'silver' and 'corpus'. If we now click on the keyword 'wood', only items made of wood are displayed.

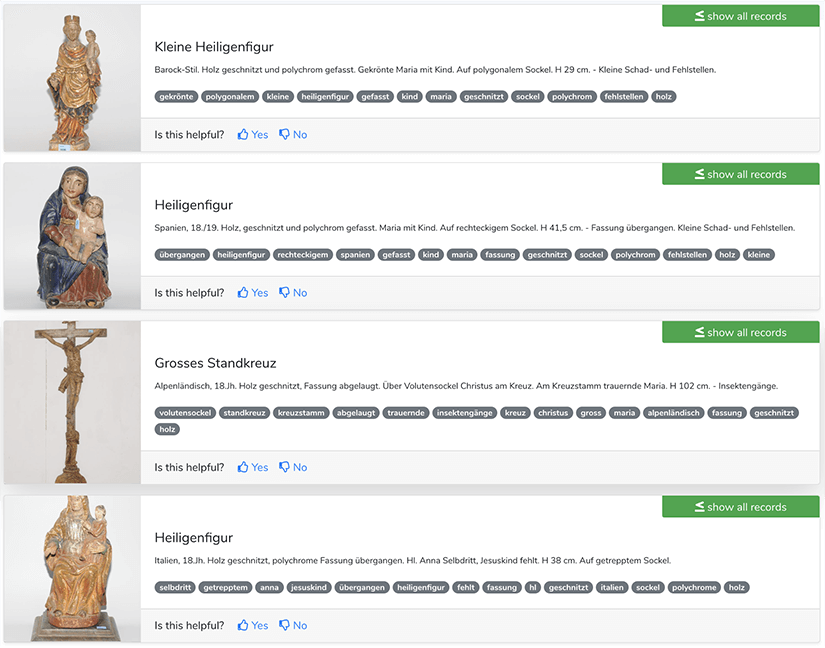

If we now want to find items similar to the wooden figure, we simply click on 'show similar records'.

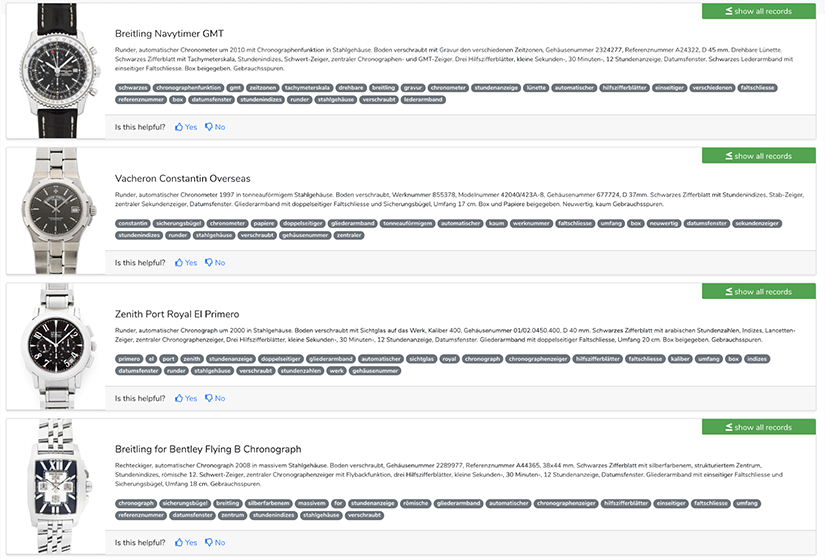

KenSpace's AI will now find and display the matching results. Without any knowledge of what a figure looks like or what wood is, KenSpace's AI is able to recognise the figure from its text and display similar items. Another example would be finding a nice watch: again, it would be no problem for the AI to suggest similar watches.
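How a feature like 'show similar records' can work on text alone is sketched below. We assume a Jaccard similarity over the Bag of Words of each record; the lot identifiers and word lists are invented for this example, and KenSpace itself may rank results differently.

```python
def jaccard(first_bag, second_bag):
    """Share of distinct words that two bags have in common (0.0 to 1.0)."""
    first, second = set(first_bag), set(second_bag)
    union = first | second
    return len(first & second) / len(union) if union else 0.0


def show_similar(query_id, records, top_n=3):
    """Return up to top_n record ids that share words with the query, best match first."""
    query_bag = records[query_id]
    scored = [
        (jaccard(query_bag, bag), record_id)
        for record_id, bag in records.items()
        if record_id != query_id
    ]
    # Highest score first; ties are broken alphabetically by record id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [record_id for score, record_id in scored[:top_n] if score > 0]


# Hypothetical records: identifier -> Bag of Words built from title and description.
records = {
    "lot-1": ["figure", "wood", "carved"],
    "lot-2": ["figure", "wood", "painted"],
    "lot-3": ["watch", "silver"],
    "lot-4": ["bowl", "wood"],
}
print(show_similar("lot-1", records))
```

For the wooden figure 'lot-1', the sketch returns `['lot-2', 'lot-4']`: the other wooden figure first, then the wooden bowl, while the silver watch is left out because it shares no words with the query.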

The four requirements for Artificial Intelligence to work

KenSpace's remarkable result does not come without a lot of effort. As mentioned at the beginning of this blog post, there are a lot of things to do before creating an AI that can understand and summarise text:

1. Proper preparation of data

Before a dataset can be successfully processed, it is mandatory to clean it and put it through automated preprocessing. For this purpose, we use NLP, for example, to translate the data into a form a computer can work with. The amount of data is also crucial for success: in general, the more data we have available, the better. Too much data, however, can also be a disadvantage, for example because preprocessing takes longer. Above all, the data must be correct.

2. Training of the model

To train the model properly so that it can group the elements correctly, we use unsupervised learning. Since this step can take a lot of time, it is recommended to save intermediate results regularly, so that training does not have to start from scratch after an interruption.
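Saving intermediate results can be as simple as the following sketch. It assumes that the training state is an ordinary Python object that `pickle` can serialise; the file name and the contents of the state dictionary are made up for illustration.

```python
import os
import pickle
import tempfile


def save_checkpoint(state, path):
    """Write the state to a temporary file first, then rename it into place.

    This way an interruption never leaves a half-written checkpoint behind.
    """
    temporary_path = path + ".tmp"
    with open(temporary_path, "wb") as handle:
        pickle.dump(state, handle)
    os.replace(temporary_path, path)


def load_checkpoint(path, default=None):
    """Return the saved state, or `default` if no checkpoint exists yet."""
    if not os.path.exists(path):
        return default
    with open(path, "rb") as handle:
        return pickle.load(handle)


# Hypothetical training state, stored in a throwaway directory.
checkpoint_path = os.path.join(tempfile.mkdtemp(), "clusters.pkl")
save_checkpoint({"iteration": 10, "clusters": [[0, 1], [2, 3]]}, checkpoint_path)
restored = load_checkpoint(checkpoint_path)
print(restored)
```

A training loop would call `save_checkpoint` every few iterations and `load_checkpoint` once at start-up. Since `pickle` can execute code while loading, checkpoints should only be loaded from files we created ourselves.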

3. Evaluation of the input

If we use unsupervised learning, this step must be done manually. For this, we need a gold standard against which the results can be compared. For example, if we are working with movies, we can check whether the movies grouped together belong to the same genre.

When we work with supervised learning, we can easily compare our results with the test data. The performance is usually evaluated with the F-score.
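The F-score combines precision (how many of the returned items are correct) and recall (how many of the correct items were returned). Here is a minimal sketch that compares one group of movies with a gold standard; the movie lists are invented for this example.

```python
def f_score(predicted, expected, beta=1.0):
    """F-beta score of a predicted set against the expected (gold standard) set."""
    predicted, expected = set(predicted), set(expected)
    true_positives = len(predicted & expected)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(expected)
    beta_squared = beta * beta
    return (1 + beta_squared) * precision * recall / (beta_squared * precision + recall)


# Hypothetical cluster and the group we would have expected instead.
predicted_group = ["Star Wars IV", "Star Wars V", "Star Trek"]
expected_group = ["Star Wars IV", "Star Wars V", "Star Wars VI"]
score = f_score(predicted_group, expected_group)
print(round(score, 3))
```

Two of the three predicted movies are correct and two of the three expected movies were found, so precision and recall are both 2/3 and the F-score is 2/3 as well (about 0.667).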

4. Performance evaluation

This is the most important step. In unsupervised learning, the F-score is just a number: it can be high even though the grouping performs very poorly in practice. It is therefore imperative to check, with a user study or by inspecting the results yourself, whether the items are grouped correctly. Keep in mind that we humans, unlike computers, tend to see patterns where there are none, so a result can appear better than expected by pure chance (see «Apophenia» for more on this phenomenon).

Conclusion

Teaching computers to read is possible and brings many benefits, such as rapid grouping and searching of data content. However, before an AI is capable of reading, various steps need to be taken, especially in preparing the available data. With the AI KenSpace, which Stefan Brunner and I developed, results can be retrieved quickly and clearly.

If you want to know more about reading computers, KenSpace or the successful processing of data for Artificial Intelligence, please contact us! We look forward to hearing from you.