At the beginning of 2023, artificial intelligence (AI) is getting more attention than ever. It is already part of our daily lives: we use it in our homes, cars, offices, schools, hospitals, factories, and cities. It helps make our lives easier, more productive, and healthier. ChatGPT and DALL·E 2 were introduced last year, and I think that was the first time many people realized how powerful AI can be. Both tools were developed by OpenAI, the company behind GPT-3.

In this blog entry, we want to highlight the limitations of AI and the responsibility we as engineers carry when developing it. We already talked about GPT («Generative Pre-trained Transformer») and its limitations in our previous blog post, AI art – generating images with words, and we even created a Valentine's Day card based solely on AI.

Some of you might ask yourselves «will AI replace us?» or even «will AI destroy us?», as seen in many science fiction movies. When reading about responsibility in AI, you will most likely come across the «Three Laws of Robotics» by Isaac Asimov, first published in 1942. Asimov later added a further law that puts humanity as a whole first: «A robot may not harm humanity, or, by inaction, allow humanity to come to harm». Currently, only a few laws hold the creators of AI responsible for the actions of their systems and the harm those systems could cause. However, the truth is that AI won't harm us, but the people who create it will.

The fear of AI taking over humanity and causing harm is rooted in the idea that machines can become autonomous and operate without human intervention. People often discuss the term singularity: a hypothetical point in the future at which artificial intelligence surpasses human intelligence and becomes self-improving. Although AI is advancing rapidly, it is still a tool that is designed, created, and operated by humans. While AI can be programmed to perform complex tasks and make decisions, it remains bound by the limitations of its programming and the data it has been trained on. When trying to build responsible AI, there are some important things to consider:

Bias

Language models such as GPT are trained on a large dataset of text from the internet, which may contain biases and stereotypes. This can lead to the model producing biased or discriminatory output, or wrong statements. For a list of biases, refer to the blog post from Google: Fairness: Types of Bias | Machine Learning | Google Developers

We cannot argue that a pedestrian detector is safe simply because it performs well on a large data set, because that data set may well omit important, but rare, phenomena (for example, people mounting bicycles). We wouldn’t want our automated driver to run over a pedestrian who happened to do something unusual.

Peter Norvig and Stuart J. Russell, Artificial Intelligence: A Modern Approach.
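
To make the point of the quote concrete, here is a minimal sketch in Python. The slice names and counts are made up for illustration and do not come from a real detector. It shows how a high overall accuracy can hide a poor result on a rare slice of the data:

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Return (overall_accuracy, {slice_name: accuracy}).

    Each record is a (slice_name, was_correct) pair.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, was_correct in records:
        totals[slice_name] += 1
        correct[slice_name] += int(was_correct)
    if not totals:
        raise ValueError("no records to evaluate")
    overall = sum(correct.values()) / sum(totals.values())
    per_slice = {name: correct[name] / totals[name] for name in totals}
    return overall, per_slice


if __name__ == "__main__":
    # Hypothetical evaluation results: a common and a rare situation.
    records = (
        [("walking", True)] * 980
        + [("walking", False)] * 10
        + [("mounting bicycle", True)] * 4
        + [("mounting bicycle", False)] * 6
    )
    overall, per_slice = accuracy_by_slice(records)
    print(f"overall: {overall:.3f}")
    for name, accuracy in per_slice.items():
        print(f"{name}: {accuracy:.3f}")
```

With these invented numbers the overall accuracy looks excellent, while the detector gets the rare slice wrong more often than right. Reporting results per slice is one simple way to surface such gaps, assuming the rare cases are present and labelled in the evaluation data at all.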

Misinformation

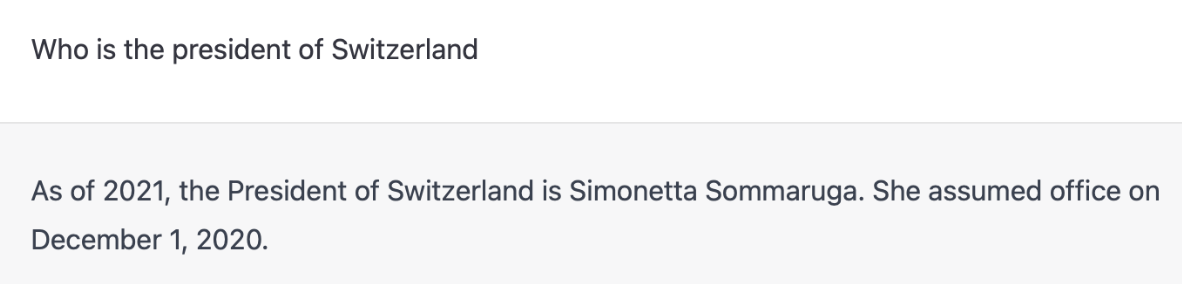

As mentioned in the Bias, AI could be trained on wrong data. GPT can generate text that is factually incorrect or misleading, while still sounding correct, spreading misinformation:

ChatGPTs answer to the question, who the president of Switzerland is

OpenAI's research prototype WebGPT is designed to improve the factual accuracy of answers generated by GPT-3 by letting the model search and browse the web and cite the sources it used. This could improve the quality of generated information for a wide range of applications, including chatbots, virtual assistants, and search engines. See WebGPT: Improving the Factual Accuracy of Language Models through Web Browsing

Lack of understanding

GPT is a language model that does not understand the meaning of the text it generates. It predicts likely next words based on patterns in its training data, which can lead to nonsensical or irrelevant output.

Privacy and Safety

AI can be fine-tuned on personal data, which can then be used to generate fake videos, audio, and images of individuals that appear to be real. This technology, known as deepfakes, can be used to manipulate information and create false narratives, leading to misinformation and even defamation. See also Forecasting Potential Misuses of Language Models for Disinformation Campaigns—and How to Reduce Risk

Moreover, deepfakes pose serious privacy concerns, as they allow anyone to generate videos with someone else's face and voice without that person's consent. This can result in significant harm, because individuals may be depicted in compromising or illegal situations without their knowledge. Have a look at the last section of our previous blog post about deepfakes.

Lack of control

GPT can generate a wide range of text, including text that the user may not have intended or expected.

Therefore, the responsibility for any harm caused by AI lies with the people who create it, as seen in these examples. The potential for AI to cause harm can come from various factors such as biased or incomplete data, poor programming, or malicious intent. The creators of AI must ensure that their technology is developed ethically, responsibly, and with the potential impact on society in mind. It is important to note that these risks are not inherent to AI itself, but rather are a result of the ways in which it is designed, developed, and deployed.

Regulation principles

However, the responsibility for AI doesn't just fall on the creators. It is also up to governments and organizations to regulate the development and deployment of AI. Regulation should aim to ensure that AI is used ethically and that the technology is developed in a way that benefits society as a whole. One community that suggests principles that could serve as a basis for regulation is FAT/ML: Fairness, Accountability, and Transparency in Machine Learning.

They suggest the following principles for socially acceptable algorithms:

- Responsibility: Make available externally visible avenues of redress for adverse individual or societal effects of an algorithmic decision system, and designate an internal role for the person who is responsible for the timely remedy of such issues.

- Explainability: Ensure that algorithmic decisions as well as any data driving those decisions can be explained to end-users and other stakeholders in non-technical terms.

- Accuracy: Identify, log, and articulate sources of error and uncertainty throughout the algorithm and its data sources so that expected and worst-case implications can be understood and inform mitigation procedures.

- Auditability: Enable interested third parties to probe, understand, and review the behaviour of the algorithm through disclosure of information that enables monitoring, checking, or criticism, including through provision of detailed documentation, technically suitable APIs, and permissive terms of use.

- Fairness: Ensure that algorithmic decisions do not create discriminatory or unjust impacts when comparing across different demographics (e.g. race, sex, etc).
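
One simple way to start checking the fairness principle is to compare how often each group receives a positive decision, a measure often called demographic parity. The following is a minimal sketch in Python. The function names, group labels, and data are assumptions for illustration, and demographic parity is only one of several possible fairness measures, not one prescribed by FAT/ML:

```python
def positive_rates(decisions):
    """Return {group: share of positive decisions}.

    `decisions` is an iterable of (group, approved) pairs.
    """
    counts = {}
    for group, approved in decisions:
        total, positives = counts.get(group, (0, 0))
        counts[group] = (total + 1, positives + int(approved))
    return {group: positives / total for group, (total, positives) in counts.items()}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest positive rate across groups."""
    rates = positive_rates(decisions)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical decisions of an algorithmic system for two groups.
    decisions = (
        [("group A", True)] * 60 + [("group A", False)] * 40
        + [("group B", True)] * 30 + [("group B", False)] * 70
    )
    for group, rate in positive_rates(decisions).items():
        print(f"{group}: {rate:.2f}")
    print(f"gap: {demographic_parity_gap(decisions):.2f}")
```

A gap close to zero means the groups receive positive decisions at a similar rate. A large gap does not prove discrimination on its own, but it is a signal that the system and its training data deserve a closer look.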

Those principles are also embedded in our core values at Renuo: Responsibility and Fairness and Transparency and Together.

As mentioned, the responsibility for any harm caused by AI ultimately lies with the people who create and deploy it. This means that at Renuo, we believe that it is our responsibility to ensure that any AI technology we develop is ethical, responsible, and has a positive impact on society. We also believe in developing AI systems that are free from bias and treat all individuals fairly and equally.

Transparency is also crucial when it comes to the development and deployment of AI. It is essential that the workings of an AI system are transparent and can be understood by users, regulators, and the public. At Renuo, we believe in providing transparency in our AI systems, so that stakeholders can understand how they work and how decisions are made.

In conclusion

AI is not inherently dangerous, but the way it is developed, used and regulated could pose risks to society, and therefore it's crucial to have a responsible approach towards its development and use.

It is important to note that while the singularity is a possibility, it is not necessarily a doomsday scenario, and it is up to us to ensure that AI is used to benefit humanity as a whole. As we continue to develop and deploy AI, we must remain mindful of its potential impact on society and work together to ensure that its benefits are maximized and its risks minimized.

As an AI language model, I don't have personal beliefs or opinions. The singularity is a hypothetical concept that is still being debated and researched by experts in the field. While some experts believe that it is a possibility, others are more sceptical about the idea. Ultimately, the future of AI and the possibility of a singularity is uncertain and depends on numerous factors, including ongoing research, technological advancements, and societal developments.

ChatGPT’s answer to the question of whether the singularity will happen